Apache Kafka Explained

What is Kafka and Why Do We Need It?

Real-World Problem: Uber Example

Let’s understand with a simple example. Suppose there is a company called Uber. In Uber, there are:

- Drivers (pilots)

- Customers

The Problem:

- Customer books a ride

- Customer wants to track the driver’s live location

- Driver sends their location coordinates every second to the Uber server

- This location data needs to be:

- Saved in database for analysis

- Sent to customer for live tracking

- etc

Let’s do the math:

- Suppose a driver sends location every second

- If it takes 30 minutes to reach customer location

- 30 minutes = 30 × 60 = 1800 seconds

- This means 1800 records will be saved in database for just one ride!

Now imagine:

- 100,000 (1 lakh) active rides at the same time

- Each sending location every second

- Database will receive 100,000 × 1,800 = 180,000,000 (18 crore) insertions in 30 minutes

- That is about 100,000 write operations per second, which is very high for a single database

- System might crash due to high load
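The arithmetic above can be double-checked with a few lines of JavaScript. This is only a back-of-the-envelope sketch; the function name `estimateLoad` and its parameter names are made up for illustration.

```javascript
// Back-of-the-envelope load estimate for the ride example.
function estimateLoad({ activeRides, updatesPerSecond, rideMinutes }) {
  const rideSeconds = rideMinutes * 60;
  const recordsPerRide = rideSeconds * updatesPerSecond;
  const totalRecords = recordsPerRide * activeRides;
  const writesPerSecond = activeRides * updatesPerSecond;
  return { recordsPerRide, totalRecords, writesPerSecond };
}

const load = estimateLoad({ activeRides: 100000, updatesPerSecond: 1, rideMinutes: 30 });
console.log(load.recordsPerRide);  // 1800
console.log(load.totalRecords);    // 180000000
console.log(load.writesPerSecond); // 100000
```

The last number is the one that matters most for the database: it has to absorb roughly 100,000 writes every second, not just 180 million rows spread over half an hour.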

Database Throughput Problem

What is Throughput?

- Throughput means how many operations (reads/writes) a database can handle in a given amount of time

- If the incoming load exceeds that throughput, requests queue up and latency grows

- Sustained overload can slow down or even crash the system

Traditional Database Issues:

- Low throughput capacity

- Cannot handle millions of real-time operations

- Gets slow with heavy traffic
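To see why load above the throughput limit is a problem, here is a toy simulation. It is a deliberately simplified model, and the capacity figure of 20,000 writes per second is a hypothetical number chosen only for illustration.

```javascript
// Toy model: each second `arrivalsPerSec` writes arrive and at most
// `capacityPerSec` are completed; whatever is left waits in a backlog.
function simulateBacklog({ arrivalsPerSec, capacityPerSec, seconds }) {
  let backlog = 0;
  for (let t = 0; t < seconds; t++) {
    backlog = Math.max(0, backlog + arrivalsPerSec - capacityPerSec);
  }
  return backlog;
}

// Hypothetical numbers: 100,000 writes/sec arriving, 20,000 writes/sec capacity.
console.log(simulateBacklog({ arrivalsPerSec: 100000, capacityPerSec: 20000, seconds: 60 }));
// 4800000 (writes still waiting after one minute)

// When the load stays below capacity, nothing piles up.
console.log(simulateBacklog({ arrivalsPerSec: 10000, capacityPerSec: 20000, seconds: 60 }));
// 0
```

The backlog grows without bound as long as arrivals exceed capacity, which is the situation a buffer in front of the database is meant to absorb.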

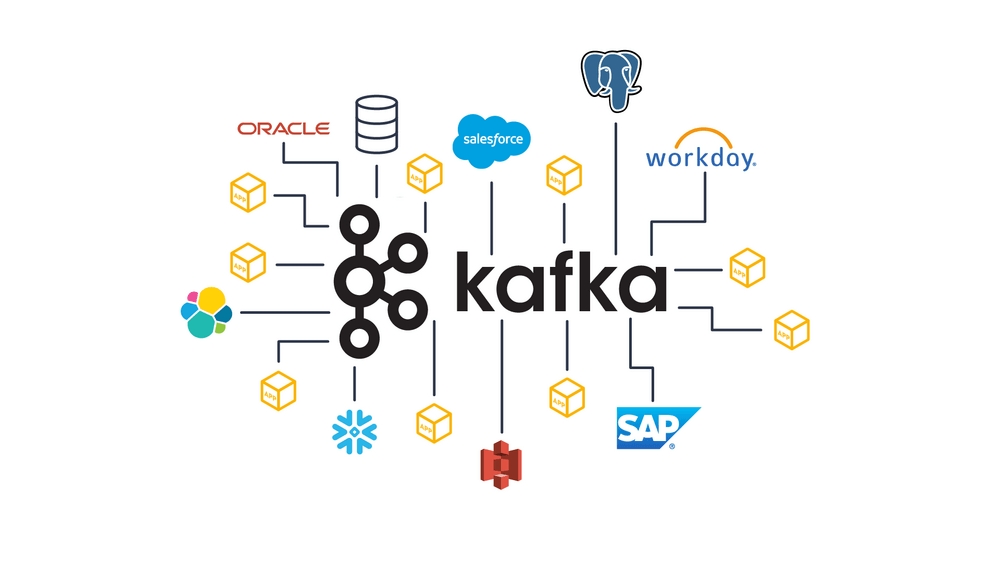

Kafka - The Solution

What is Kafka?

- Primary Identity: Distributed Event Streaming Platform (A system that captures and shares data at the very moment it happens)

- Secondary Use: Can work as a Message Broker

- Kafka has very high throughput (millions of events per second)

- It is NOT a replacement for database

- Kafka provides persistent event storage with configurable retention

- Database has permanent storage but limited real-time throughput

Key Difference from Traditional Message Brokers:

- Message Broker: Messages deleted after consumption

- Event Streaming: Events stored persistently, can be replayed

How Kafka Helps:

- Handles millions of events per second

- Acts as a buffer between data producers and consumers

- Prevents database overload

- Events can be replayed for reprocessing

- Multiple consumers can read same events

- Enables real-time stream processing
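The ideas of "events are stored, not deleted" and "events can be replayed" are easier to see in code. The sketch below is a toy in-memory log, not real Kafka; the class name `EventLog` and the reader names are invented for this example.

```javascript
// A toy, in-memory stand-in for one Kafka partition (NOT real Kafka).
class EventLog {
  constructor() {
    this.events = [];
  }

  // Append an event and return its offset (its position in the log).
  append(event) {
    this.events.push(event);
    return this.events.length - 1;
  }

  // Read events starting at an offset; reading never deletes anything.
  readFrom(offset) {
    return this.events.slice(offset);
  }
}

const log = new EventLog();
log.append({ driverId: 'd1', lat: 28.61, lng: 77.2 });
log.append({ driverId: 'd1', lat: 28.62, lng: 77.21 });
log.append({ driverId: 'd1', lat: 28.63, lng: 77.22 });

// Each reader tracks its own offset, so readers do not interfere.
const offsets = { tracking: 0, analytics: 0 };

const trackingBatch = log.readFrom(offsets.tracking);
offsets.tracking += trackingBatch.length; // tracking has now read 3 events

const analyticsBatch = log.readFrom(offsets.analytics); // still sees all 3

offsets.tracking = 0; // "replay": rewind and read everything again
const replayed = log.readFrom(offsets.tracking);

console.log(trackingBatch.length, analyticsBatch.length, replayed.length); // 3 3 3
```

A traditional queue would have removed each message once it was read; here the log stays intact and only the reader's offset moves.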

Kafka Architecture

Basic Components

[Producer] -----> [Kafka Server] -----> [Consumer]
(Driver App)     (Message Broker)     (Location Service)
                        |
                        |
                  ┌─────────┐
                  │ Topic 1 │ (driver-locations)
                  │ Topic 2 │ (ride-requests)
                  │ Topic 3 │ (payments)
                  └─────────┘

Components:

- Producer: Sends data to Kafka (like Uber driver sending location)

- Kafka Server (Broker): Stores events on disk and serves them to consumers for a configurable retention period

- Consumer: Reads data from Kafka (like location service sending data to customer)

- Topics: Categories where messages are stored (like “driver-locations”, “ride-requests”)

Topics and Partitions

What are Topics?

- Topics are like folders where similar messages are stored

- Example topics: “driver-locations”, “payment-data”, “ride-bookings”

- Definition: A topic is like a category or channel where messages are stored in Kafka.

What are Partitions?

- Each topic is divided into smaller parts called partitions

- This helps in parallel processing

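- More partitions allow more consumers to work in parallel, which usually means faster processing (as long as there are enough consumers)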

Topic: driver-locations

┌─────────────────────────────────────────────────────────────┐

│ │

│ Partition 0 Partition 1 Partition 2 │

│ (North India) (South India) (East India) │

│ ┌──────────┐ ┌──────────┐ ┌──────────┐ │

│ │ Message1 │ │ Message3 │ │ Message5 │ │

│ │ Message2 │ │ Message4 │ │ Message6 │ │

│ └──────────┘ └──────────┘ └──────────┘ │

│ ↓ ↓ ↓ │

│ Consumer 1 Consumer 2 Consumer 3 │

└─────────────────────────────────────────────────────────────┘
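How does a message end up in a particular partition? Kafka clients typically hash the message key, so messages with the same key land in the same partition and keep their order. The sketch below shows that idea with a simple stand-in hash. It is not the hash Kafka clients actually use, and `simpleHash` and `choosePartition` are hypothetical names.

```javascript
// A simple string hash used only for illustration.
// Real Kafka clients use a different hash function.
function simpleHash(key) {
  let hash = 0;
  for (const ch of key) {
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0; // keep it an unsigned 32-bit integer
  }
  return hash;
}

// Same key -> same hash -> same partition.
function choosePartition(key, numPartitions) {
  return simpleHash(key) % numPartitions;
}

const first = choosePartition('driver-42', 3);
const second = choosePartition('driver-42', 3);
console.log(first === second); // true
```

Using the driver ID as the key keeps every location update for one driver in a single partition, so a consumer sees that driver's updates in the order they were sent.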

Consumer Groups and Load Balancing

Why Do We Need Consumer Groups?

🤔 Problem Without Consumer Groups:

Topic with 1 Million Messages

┌─────────────────────────────┐

│ Message 1, Message 2, ... │

│ Message 999,999 │

│ Message 1,000,000 │

└─────────────────────────────┘

│

▼

┌─────────────┐

│ Single │ ← Takes 10 hours to process all messages!

│ Consumer │

└─────────────┘

✅ Solution With Consumer Groups:

Topic with 1 Million Messages (4 Partitions)

┌─────────┐ ┌─────────┐ ┌─────────┐ ┌─────────┐

│250K msgs│ │250K msgs│ │250K msgs│ │250K msgs│

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────────┘ └─────────┘ └─────────┘ └─────────┘

│ │ │ │

▼ ▼ ▼ ▼

┌─────────┐ ┌─────────┐ ┌─────────┐ ┌─────────┐

│Consumer │ │Consumer │ │Consumer │ │Consumer │

│ 1 │ │ 2 │ │ 3 │ │ 4 │

└─────────┘ └─────────┘ └─────────┘ └─────────┘

↓

Takes only 2.5 hours! (4x faster)

🎯 Benefits of Consumer Groups:

- ⚡ Parallel Processing: Multiple consumers work simultaneously

- 🔄 Load Distribution: Work is automatically divided

- 🛡️ Fault Tolerance: If one consumer fails, its partitions are reassigned to the remaining consumers in the group

- 📈 Scalability: Add more consumers to handle more load

- 🎭 Multiple Use Cases: Different groups can process same data differently

🏢 Real-World Example (E-commerce):

Order Topic → Consumer Group 1 (Inventory Service) ← Updates stock

→ Consumer Group 2 (Payment Service) ← Processes payment

→ Consumer Group 3 (Email Service) ← Sends confirmation

→ Consumer Group 4 (Analytics Service) ← Records metrics

Each service gets the same order data but processes it for different purposes!

Auto-Balancing Rules

Important Rule:

- One partition can be consumed by only ONE consumer at a time within the same group

- One consumer can handle multiple partitions

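- This ensures that, within a group, each message is handled by only one consumer at a time (duplicates can still happen after a crash or rebalance, because Kafka's default delivery guarantee is at-least-once)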

Different Scenarios

Scenario 1: 1 Consumer, 4 Partitions

Topic with 4 Partitions:

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

└───────┼───────┼───────┘

└───────┘

│

┌──────────┐

│Consumer 1│ (handles ALL partitions)

└──────────┘

Result: Consumer 1 handles all 4 partitions (P0, P1, P2, P3)

Scenario 2: 2 Consumers, 4 Partitions

Topic with 4 Partitions:

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

└───────┘ └───────┘

│ │

┌──────────┐ ┌──────────┐

│Consumer 1│ │Consumer 2│

│ (P0, P1) │ │ (P2, P3) │

└──────────┘ └──────────┘

Result: Each consumer handles 2 partitions (Auto-balanced)

Scenario 3: 3 Consumers, 4 Partitions

Topic with 4 Partitions:

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

└───────┘ │ │

│ │ │

┌──────────┐ ┌─────────┐ ┌─────────┐

│Consumer 1│ │Consumer │ │Consumer │

│ (P0, P1) │ │ 2 │ │ 3 │

└──────────┘ │ (P2) │ │ (P3) │

└─────────┘ └─────────┘

Result: Consumer 1 gets 2 partitions, Consumer 2 and 3 get 1 partition each

Scenario 4: 4 Consumers, 4 Partitions (Perfect Balance!)

Topic with 4 Partitions:

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

│ │ │ │

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│Con 1│ │Con 2│ │Con 3│ │Con 4│

│(P0) │ │(P1) │ │(P2) │ │(P3) │

└─────┘ └─────┘ └─────┘ └─────┘

Result: Perfect balance - each consumer gets exactly 1 partition

Scenario 5: 5 Consumers, 4 Partitions (1 Consumer IDLE!)

Topic with 4 Partitions:

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

│ │ │ │

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐ ┌─────────┐

│Con 1│ │Con 2│ │Con 3│ │Con 4│ │Consumer │

│(P0) │ │(P1) │ │(P2) │ │(P3) │ │ 5 │

└─────┘ └─────┘ └─────┘ └─────┘ │ (IDLE) │

└─────────┘

Result: Consumer 5 remains IDLE (no work to do)
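The five scenarios above can be reproduced with a small function. This is a sketch of a range-style split that happens to match the diagrams; the real assignment in a Kafka cluster depends on which assignor the client is configured with, and `assignPartitions` is a hypothetical helper, not a Kafka API.

```javascript
// Split partitions 0..numPartitions-1 across consumers in contiguous ranges.
// Earlier consumers receive one extra partition when the split is uneven.
function assignPartitions(numPartitions, consumers) {
  if (consumers.length === 0) return {};
  const base = Math.floor(numPartitions / consumers.length);
  const extra = numPartitions % consumers.length;
  const assignment = {};
  let next = 0;
  consumers.forEach((consumer, index) => {
    const count = base + (index < extra ? 1 : 0);
    assignment[consumer] = [];
    for (let k = 0; k < count; k++) {
      assignment[consumer].push(next++);
    }
  });
  return assignment;
}

// Scenario 3: 3 consumers, 4 partitions
console.log(JSON.stringify(assignPartitions(4, ['c1', 'c2', 'c3'])));
// {"c1":[0,1],"c2":[2],"c3":[3]}

// Scenario 5: 5 consumers, 4 partitions (c5 gets nothing and stays idle)
console.log(JSON.stringify(assignPartitions(4, ['c1', 'c2', 'c3', 'c4', 'c5'])));
// {"c1":[0],"c2":[1],"c3":[2],"c4":[3],"c5":[]}
```

Whatever strategy is used, the invariant is the same: inside one group, every partition has exactly one owner.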

Multiple Consumer Groups

Important Concept:

- Different consumer groups can read the same data

- Each group processes data independently

Topic: driver-locations

┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐

│ P0 │ │ P1 │ │ P2 │ │ P3 │

└─────┘ └─────┘ └─────┘ └─────┘

│ │ │ │

├───────┼───────┼───────┤ (Both groups read same data)

│ │ │ │

┌────────┴───────┴───────┴───────┴────────┐

│ Consumer Group 1 │

│ (Location Service) │

│ ┌─────┐ ┌─────┐ ┌─────┐ ┌─────┐ │

│ │Con 1│ │Con 2│ │Con 3│ │Con 4│ │

│ │(P0) │ │(P1) │ │(P2) │ │(P3) │ │

│ └─────┘ └─────┘ └─────┘ └─────┘ │

└──────────────────────────────────────────┘

│ │ │ │

┌────────┴───────┴───────┴───────┴────────┐

│ Consumer Group 2 │

│ (Analytics Service) │

│ ┌─────────────────┐ │

│ │ Consumer 1 │ │

│ │ (All P0-P3) │ │

│ └─────────────────┘ │

└──────────────────────────────────────────┘

Use Case:

- Group 1: Sends live location to customers (4 consumers for speed)

- Group 2: Stores data for analytics and reporting (1 consumer is enough)

Queue vs Pub/Sub in Kafka

Traditional Systems

- SQS/RabbitMQ: Primarily queue-based (SQS needs SNS for fan-out; RabbitMQ does pub/sub through exchanges)

- Kafka: Supports both Queue and Pub/Sub through consumer groups

Queue Behavior in Kafka (Within Same Group)

Producer ──┐

│

Producer ──┤──── Topic (4 Partitions) ──── Consumer Group (4 Consumers)

│ P0, P1, P2, P3 │

Producer ──┘ ├─ Consumer 1 ← P0

├─ Consumer 2 ← P1

├─ Consumer 3 ← P2

└─ Consumer 4 ← P3

📌 Each partition goes to ONLY ONE consumer in the group

📌 Each consumer handles exactly one partition (perfect balance!)

📌 Work is divided among consumers (no duplication)

📌 Perfect for task distribution

✅ Ideal Scenario: Topic Partitions = Consumer Count (4 = 4)

Detailed Queue Behavior Cases:

Case 1: Consumers = Partitions (Perfect Balance)

- Each consumer gets exactly one partition.

- Messages in a partition are read by only one consumer.

- This behaves just like a queue:

- Work is evenly distributed.

- No duplication inside the group. ✅ Queue-like behavior achieved.

Case 2: Consumers < Partitions (Some consumers handle more)

- Some consumers handle multiple partitions.

- Still queue-like, but less parallelism (some consumers get more load).

- Example: 2 consumers, 4 partitions = each consumer handles 2 partitions

Case 3: Consumers > Partitions (Some consumers idle)

- Some consumers will be idle.

- Kafka won’t assign more than one consumer to the same partition (inside a group).

- This means adding consumers beyond partitions won’t increase throughput.

- Example: 6 consumers, 4 partitions = 2 consumers will be idle

Pub/Sub Twist:

Even in the “queue” case above (1 consumer per partition), if you add another consumer group, they will all get their own copy of the data (that’s the pub/sub behavior).

Pub/Sub Behavior in Kafka (Different Groups)

Producer ──┐

│

Producer ──┤──── Topic ─────┬──── Consumer Group 1 (Location Service)

│ │

Producer ──┘ ├──── Consumer Group 2 (Analytics Service)

│

└──── Consumer Group 3 (Billing Service)

📌 Each GROUP gets ALL messages

📌 Same message goes to all groups

📌 Perfect for broadcasting information
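Queue and pub/sub behavior can be shown side by side with a tiny simulation. This is plain JavaScript, not KafkaJS; the `deliver` function and the group and consumer names are made up, and partition ownership is simplified to round-robin.

```javascript
// partitions: array of message arrays, one per partition
// groups: { groupId: [consumerName, ...] }
function deliver(partitions, groups) {
  const received = {};
  for (const [groupId, members] of Object.entries(groups)) {
    received[groupId] = Object.fromEntries(members.map((m) => [m, []]));
    partitions.forEach((messages, p) => {
      // Inside a group, each partition has exactly one owner (queue behavior).
      const owner = members[p % members.length];
      received[groupId][owner].push(...messages);
    });
  }
  // Every group went through all partitions (pub/sub behavior).
  return received;
}

const partitions = [['m1', 'm2'], ['m3', 'm4'], ['m5', 'm6'], ['m7', 'm8']];
const received = deliver(partitions, {
  'location-service': ['c1', 'c2', 'c3', 'c4'],
  'analytics-service': ['a1'],
});

console.log(JSON.stringify(received['location-service'].c1)); // ["m1","m2"]
console.log(received['analytics-service'].a1.length); // 8
```

Inside `location-service` the eight messages are split four ways, while `analytics-service` still receives all eight through its single consumer.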

KafkaJS Implementation Example

Before we dive into Kafka’s architecture evolution, let’s see how to actually use Kafka in a Node.js application using KafkaJS library.

Installation

npm install kafkajs

🔹 Producer (Send Messages)

// producer.js
const { Kafka } = require('kafkajs')

async function runProducer() {
  const kafka = new Kafka({
    clientId: 'my-producer',
    brokers: ['localhost:9092'] // match your Kafka advertised listener
  })

  const producer = kafka.producer()
  await producer.connect()

  for (let i = 0; i < 5; i++) {
    await producer.send({
      topic: 'demo-topic',
      messages: [{ key: `key-${i}`, value: `Hello KafkaJS ${i}` }]
    })
    console.log(`✅ Sent message ${i}`)
  }

  await producer.disconnect()
}

runProducer().catch(console.error)

🤔 What if Topic Doesn’t Exist?

🔹 Case 1: auto.create.topics.enable=true (Bitnami default)

- Kafka automatically creates the topic on first message

- Uses the broker's default settings: 1 partition, replication factor 1 (num.partitions=1, default.replication.factor=1)

- ✅ Your producer works without manual topic creation

🔹 Case 2: auto.create.topics.enable=false (Production setup)

- Kafka rejects the message with error:

UNKNOWN_TOPIC_OR_PARTITION

- ❌ You must manually create topic first using Kafka UI or CLI

💡 Best Practice: Always create topics manually in production for better control over partitions and replication.
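Besides the Kafka UI or CLI, topics can also be created from code through the KafkaJS admin client. The helper below is a minimal sketch: `ensureTopic` is a hypothetical name, the partition and replication values are placeholders, and it assumes the object passed in behaves like the client returned by `kafka.admin()`.

```javascript
// `admin` is expected to be a KafkaJS admin client, e.g. kafka.admin().
// Returns true if the topic was created, false if it already existed.
async function ensureTopic(admin, { topic, numPartitions, replicationFactor }) {
  await admin.connect();
  try {
    const existing = await admin.listTopics();
    if (existing.includes(topic)) {
      return false; // nothing to do
    }
    await admin.createTopics({
      topics: [{ topic, numPartitions, replicationFactor }],
    });
    return true;
  } finally {
    await admin.disconnect();
  }
}

// Usage against a real cluster (requires `npm install kafkajs`):
//   const { Kafka } = require('kafkajs')
//   const kafka = new Kafka({ clientId: 'topic-setup', brokers: ['localhost:9092'] })
//   ensureTopic(kafka.admin(), { topic: 'demo-topic', numPartitions: 4, replicationFactor: 1 })
//     .then((created) => console.log(created ? 'created' : 'already exists'))
```

Running something like this once at deploy time keeps partition and replication settings explicit instead of relying on auto-creation defaults.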

🔹 Consumer (Consume with a Group)

// consumer.js
const { Kafka } = require('kafkajs')

async function runConsumer() {
  const kafka = new Kafka({
    clientId: 'my-consumer',
    brokers: ['localhost:9092']
  })

  const consumer = kafka.consumer({ groupId: 'demo-group' })
  await consumer.connect()
  await consumer.subscribe({ topic: 'demo-topic', fromBeginning: true })

  await consumer.run({
    eachMessage: async ({ topic, partition, message }) => {
      console.log(
        `📩 Received: topic=${topic} partition=${partition} key=${message.key?.toString()} value=${message.value.toString()}`
      )
    }
  })
}

runConsumer().catch(console.error)

🔹 Run the Demo

Step 1: Make sure your Kafka is running (using the Docker setup from the "Docker Setup for Kafka" section)

Step 2: Start consumer first:

node consumer.js

Step 3: In another terminal, start producer:

node producer.js

Expected Output:

Producer Terminal:

✅ Sent message 0

✅ Sent message 1

✅ Sent message 2

✅ Sent message 3

✅ Sent message 4

Consumer Terminal:

📩 Received: topic=demo-topic partition=0 key=key-0 value=Hello KafkaJS 0

📩 Received: topic=demo-topic partition=0 key=key-1 value=Hello KafkaJS 1

📩 Received: topic=demo-topic partition=0 key=key-2 value=Hello KafkaJS 2

📩 Received: topic=demo-topic partition=0 key=key-3 value=Hello KafkaJS 3

📩 Received: topic=demo-topic partition=0 key=key-4 value=Hello KafkaJS 4

🔹 Key Points to Notice

- Client ID: Identifies your application to Kafka

- Brokers: List of Kafka server addresses

- Group ID: Consumer group for load balancing

- Topic: Where messages are stored

- fromBeginning: true: When the group has no committed offset yet, start reading from the earliest message in the topic

- Key-Value: Messages can have both key and value

- Partition Info: Consumer shows which partition message came from

🔹 Testing Different Consumer Groups

Create another consumer with different group ID:

// consumer2.js

const consumer = kafka.consumer({ groupId: 'demo-group-2' }) // Different group!

Run both consumers and one producer - both consumers will receive ALL messages (Pub/Sub behavior)!

Producer → Topic ┬→ Consumer Group 1 (gets all messages)

└→ Consumer Group 2 (gets all messages)

ZooKeeper vs KRaft

Old Architecture (With ZooKeeper) - the only option before Kafka 2.8 (2021)

┌─────────────────┐

│ ZooKeeper │ ← External dependency

│ Cluster │ (Extra complexity)

└─────────┬───────┘

│

┌─────────────┼─────────────┐

│ │ │

┌──────────┐ ┌──────────┐ ┌──────────┐

│ Kafka │ │ Kafka │ │ Kafka │

│ Broker 1 │ │ Broker 2 │ │ Broker 3 │

└──────────┘ └──────────┘ └──────────┘

ZooKeeper was needed for:

✓ Managing Kafka brokers

✓ Storing configuration data

✓ Leader election for partitions

New Architecture (KRaft Mode) - introduced in Kafka 2.8 (2021), production-ready since 3.3

┌─────────────────────────────────────────────┐

│ Kafka Cluster (KRaft Mode) │

│ │

│ ┌──────────┐ ┌──────────┐ ┌──────────┐ │

│ │ Kafka │ │ Kafka │ │ Kafka │ │

│ │ Broker 1 │ │ Broker 2 │ │ Broker 3 │ │

│ │Controller│ │ │ │ │ │

│ └──────────┘ └──────────┘ └──────────┘ │

└─────────────────────────────────────────────┘

KRaft Benefits:

✓ No external ZooKeeper needed

✓ Simpler deployment

✓ Better performance

✓ Self-managing

Docker Setup for Kafka

Complete Docker Compose Configuration

# docker-compose.yml
version: '3.8'

services:
  kafka:
    image: bitnami/kafka:3.6.1
    container_name: kafka
    ports:
      - '9092:29092' # External port for your applications
    environment:
      # KRaft mode (no ZooKeeper needed!)
      - KAFKA_ENABLE_KRAFT=yes
      - KAFKA_CFG_NODE_ID=1
      - KAFKA_CFG_PROCESS_ROLES=broker,controller
      # Network configuration
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093,EXTERNAL://:29092
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,EXTERNAL://localhost:9092
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=PLAINTEXT:PLAINTEXT,CONTROLLER:PLAINTEXT,EXTERNAL:PLAINTEXT
      # Controller settings
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@kafka:9093
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=PLAINTEXT
      # Development settings (not for production!)
      - ALLOW_PLAINTEXT_LISTENER=yes
    networks:
      - kafka-network

  # Web UI to manage Kafka easily
  kafka-ui:
    image: provectuslabs/kafka-ui
    container_name: kafka-ui
    ports:
      - '8080:8080' # Access at http://localhost:8080
    environment:
      - KAFKA_CLUSTERS_0_NAME=my-kafka-cluster
      - KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS=kafka:9092
    depends_on:
      - kafka
    networks:
      - kafka-network

networks:
  kafka-network:
    driver: bridge

How to Run

# 1. Save above content in docker-compose.yml file

# 2. Start Kafka cluster

docker-compose up -d

# 3. Check if containers are running

docker ps

# You should see:

# - kafka container running on port 9092

# - kafka-ui container running on port 8080

# 4. Access Kafka UI in browser

# Open: http://localhost:8080

# 5. Stop the cluster when done

docker-compose down

Real-World Example: Uber Location System

Let’s see how a ride-hailing system like Uber could use Kafka:

Step 1: Driver sends location

┌─────────────┐

│ Driver App │ ──── GPS Location ────┐

└─────────────┘ │

▼

Step 2: Data goes to Kafka ┌────────┐

┌─────────────┐ │ Kafka │

│ Producer │ ──── Location ─▶│ Topic │

│ (Driver API)│ Message └────┬───┘

└─────────────┘ │

│

Step 3: Multiple services consume │

┌─────────────┐ ◀────────────────────┤

│ Customer │ Live location │

│ App API │ updates │

└─────────────┘ │

│

┌─────────────┐ ◀────────────────────┤

│ Analytics │ Store location │

│ Service │ for reporting │

└─────────────┘ │

│

┌─────────────┐ ◀────────────────────┘

│ Billing │ Calculate fare

│ Service │ based on route

└─────────────┘

Benefits:

- High Speed: Can handle millions of location updates per second

- Durable: Even if the customer app is down, location data stays in Kafka until the retention period expires

- Scalable: Can add more consumers as business grows

- Multiple Uses: Same location data used for tracking, analytics, billing
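On the producer side, a location update could be shaped like the message below. This is a sketch only: `buildLocationMessage` and the field names are hypothetical, and using the driver ID as the key is an assumption made so that all updates from one driver stay in the same partition and in order.

```javascript
// Build a Kafka message ({ key, value }) for one location update.
// Key = driverId, so every update from the same driver shares a partition.
function buildLocationMessage({ driverId, rideId, lat, lng, timestamp }) {
  return {
    key: driverId,
    value: JSON.stringify({ driverId, rideId, lat, lng, timestamp }),
  };
}

const message = buildLocationMessage({
  driverId: 'driver-42',
  rideId: 'ride-1001',
  lat: 28.6139,
  lng: 77.209,
  timestamp: 1700000000000,
});

console.log(message.key); // driver-42

// With a connected KafkaJS producer this could be sent as:
//   await producer.send({ topic: 'driver-locations', messages: [message] })
```

The customer, analytics, and billing services would each consume this same message through their own consumer group.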

Key Concepts Summary

📊 Throughput Comparison

Traditional Database: 🐌 Slow

┌─────────────────────────────────┐

│ ▓▓▓ (1000 operations/sec) │

└─────────────────────────────────┘

Apache Kafka: 🚀 Super Fast

┌─────────────────────────────────┐

│ ▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓ │

└─────────────────────────────────┘

(Millions of operations/sec)

🔄 Partitions Enable Parallel Processing

- More partitions = more parallelism (up to the number of consumers in the group)

- One partition = One consumer per group

- Perfect balance when partitions = consumers

👥 Consumer Groups Enable Flexibility

- Same Group: Queue behavior (work sharing)

- Different Groups: Pub/Sub behavior (data copying)

- Automatic load balancing

- Fault tolerance

🏗️ KRaft vs ZooKeeper

- Old Way: Kafka + ZooKeeper (complex)

- New Way: Kafka only (simple)

- Better performance and easier management

Common Use Cases

1. 🚗 Real-time Location Tracking

Driver/Delivery Apps → Kafka → Customer Apps + Analytics

2. 🛒 E-commerce Order Processing

Order → Kafka → Inventory + Payment + Shipping + Email

3. 📱 Social Media Feed

User Posts → Kafka → Timeline + Recommendations + Analytics

4. 🏦 Banking Transactions

ATM/App → Kafka → Account Update + Fraud Check + SMS + Email

5. 📊 Log Management

App Logs → Kafka → Monitoring + Storage + Alerts

Best Practices (Important for Interviews!)

1. 📝 Topic Design

- Use clear topic names: user-events, order-created

- Plan partitions based on expected message volume

- More partitions = better parallelism (but not too many!)

2. 👥 Consumer Groups

- Use meaningful group IDs: payment-service, notification-service

- Monitor consumer lag (how far behind consumers are)

- Handle rebalancing properly
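Consumer lag is simply "latest offset in the partition minus the offset the group has committed". Here is a minimal sketch of that calculation. The input shape (arrays of `{ partition, offset }` with offsets as strings) is a simplifying assumption, and `computeLag` is a hypothetical helper rather than part of any Kafka library.

```javascript
// latestOffsets:    [{ partition, offset }] - end of each partition's log
// committedOffsets: [{ partition, offset }] - what the group has committed
// A committed offset of -1 (or a missing entry) is treated as "nothing read yet".
function computeLag(latestOffsets, committedOffsets) {
  const committed = new Map(
    committedOffsets.map((o) => [o.partition, Number(o.offset)])
  );
  return latestOffsets.map(({ partition, offset }) => {
    const raw = committed.get(partition);
    const done = raw === undefined || raw < 0 ? 0 : raw;
    return { partition, lag: Math.max(0, Number(offset) - done) };
  });
}

const lag = computeLag(
  [{ partition: 0, offset: '120' }, { partition: 1, offset: '80' }],
  [{ partition: 0, offset: '100' }, { partition: 1, offset: '-1' }]
);
console.log(JSON.stringify(lag));
// [{"partition":0,"lag":20},{"partition":1,"lag":80}]
```

A lag that keeps growing means the group is consuming more slowly than producers are writing, which is usually the signal to add consumers (up to the partition count) or speed up processing.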

3. ⚡ Performance Tips

- Choose the partition count with headroom for growth (a common rule of thumb is 2-3 times the number of consumers you expect)

- Monitor disk space regularly

- Use appropriate batch sizes for better throughput

4. 🛡️ Reliability

- Set replication factor to at least 3 for production

- Use proper acknowledgment settings for critical data

- Implement retry logic for failed messages
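Retry logic for a failed send can be as simple as a loop with exponential backoff. The wrapper below is a generic sketch: `sendWithRetry`, `retries`, and `baseDelayMs` are names chosen for this example, and `sendFn` stands in for whatever actually sends the message (for instance a function that calls `producer.send`).

```javascript
// Call sendFn(payload); on failure wait baseDelayMs, then 2x, 4x, ... and try again.
// Gives up after `retries` additional attempts and rethrows the last error.
async function sendWithRetry(sendFn, payload, { retries = 3, baseDelayMs = 100 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await sendFn(payload);
    } catch (err) {
      lastError = err;
      if (attempt === retries) break; // no attempts left
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
  throw lastError;
}

// Demo with a fake send function that fails twice, then succeeds.
let calls = 0;
const flakySend = async (payload) => {
  calls += 1;
  if (calls < 3) throw new Error('broker unavailable');
  return `sent ${payload.messages.length} message(s)`;
};

sendWithRetry(flakySend, { topic: 'demo-topic', messages: [{ value: 'hi' }] }, { baseDelayMs: 10 })
  .then((result) => console.log(result, 'after', calls, 'attempts'));
// sent 1 message(s) after 3 attempts
```

Because a retried send can produce the same message twice, consumers of critical data should be written so that processing a duplicate is harmless.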

Quick Interview Questions & Answers

Q1: What is Kafka and why use it?

Answer: Kafka is a distributed event streaming platform (it can also act as a high-throughput message broker) that can handle millions of messages per second. We use it to buffer real-time data that would overload a database if written directly, like in the Uber live tracking example.

Q2: Can one partition be consumed by multiple consumers?

Answer: No! Within the same consumer group, one partition can be consumed by only ONE consumer. But different consumer groups can read from the same partition.

Q3: What happens if we have more consumers than partitions?

Answer: Extra consumers become IDLE. If we have 4 partitions and 6 consumers, 2 consumers will have no work.

Q4: Queue vs Pub/Sub in Kafka?

Answer:

- Queue: Same consumer group, messages divided among consumers

- Pub/Sub: Different consumer groups, all groups get all messages

Q5: ZooKeeper vs KRaft?

Answer: Old Kafka needed external ZooKeeper for coordination. New Kafka (KRaft mode) manages its metadata internally through a Raft-based controller quorum, making it simpler to deploy and operate.

Q6: Does Kafka act as a message broker or distributed event streaming platform?

Answer: Kafka is primarily a distributed event streaming platform, not just a message broker. Unlike traditional message brokers that delete messages after consumption, Kafka stores events persistently and allows multiple consumers to replay the same events. This enables real-time analytics, stream processing, and event-driven architectures. While it can work as a message broker, that’s just one of its capabilities.